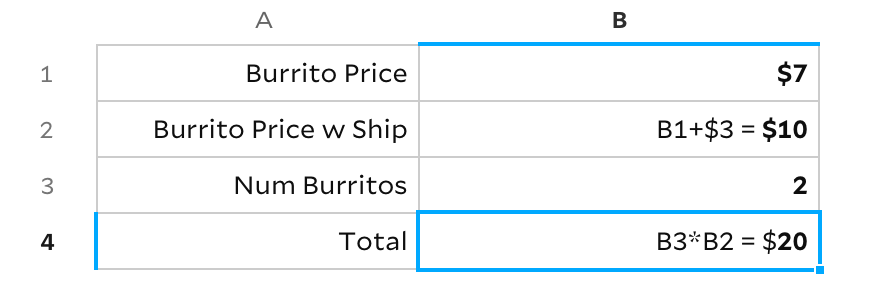

Let's say I'm ordering burritos for my two friends while they quarantine in Jersey City, and want to calculate the total price of my order:

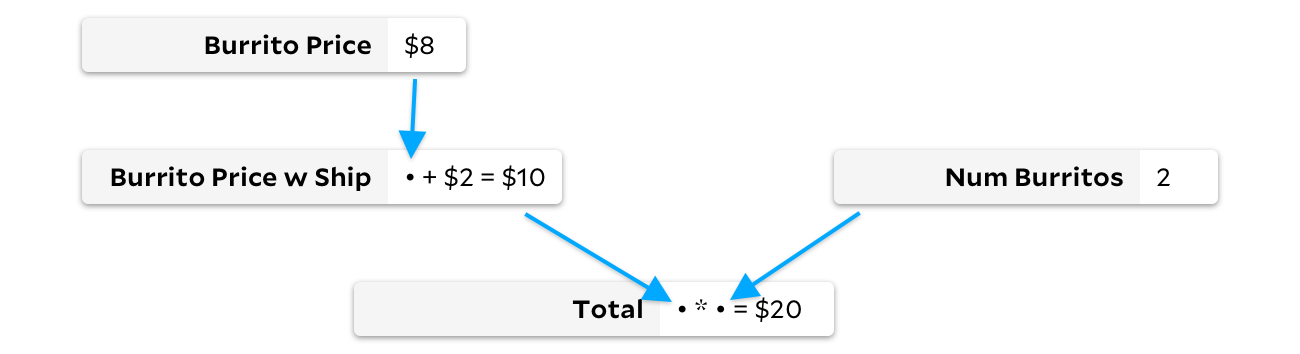

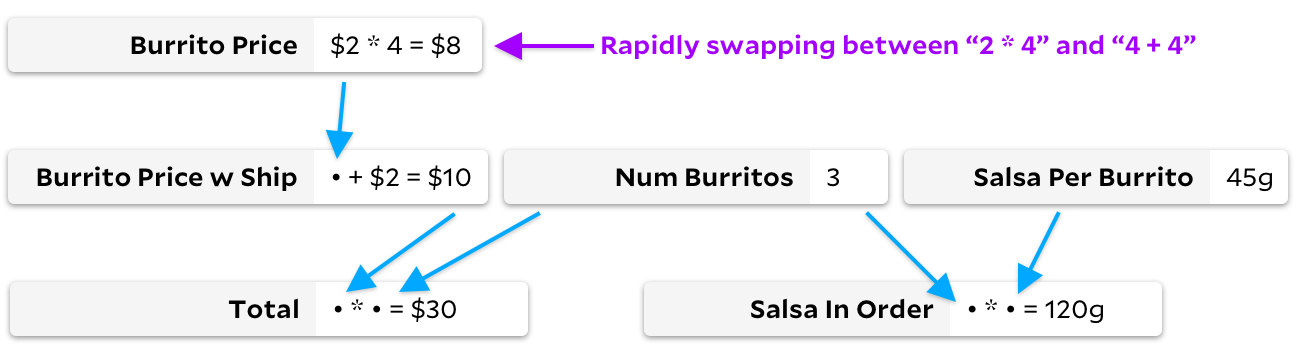

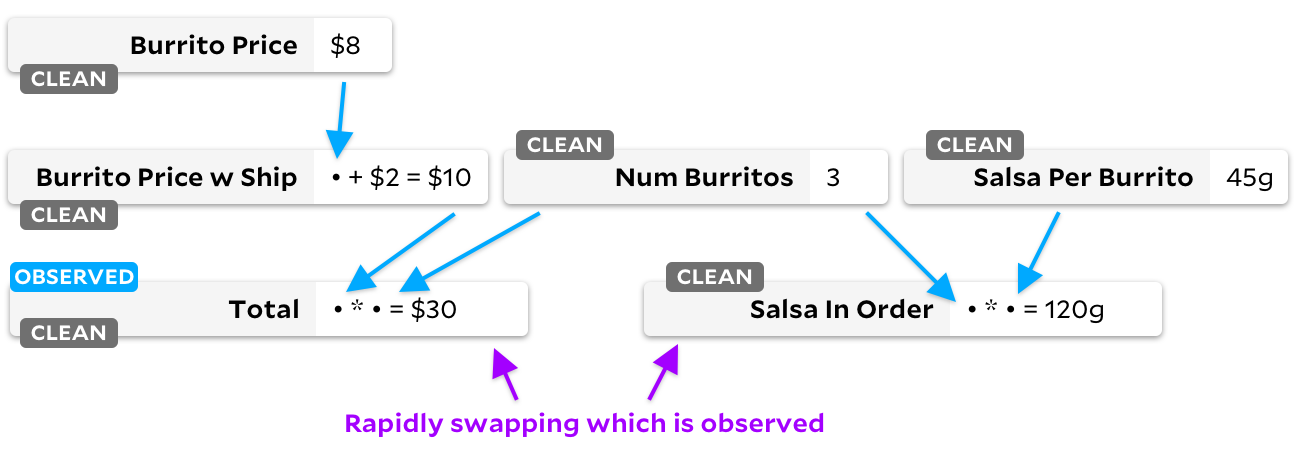

It's a little confusing to follow the flow of data in a spreadsheet when it's written like that, so I hope you don't mind this equivalent diagram that represents it as a graph:

We're rounding the cost of an El Farolito super vegi burrito to $8, so assuming the per-burrito delivery toll remains at just $2 per burrito, it looks like the total for our two burritos will be $20.

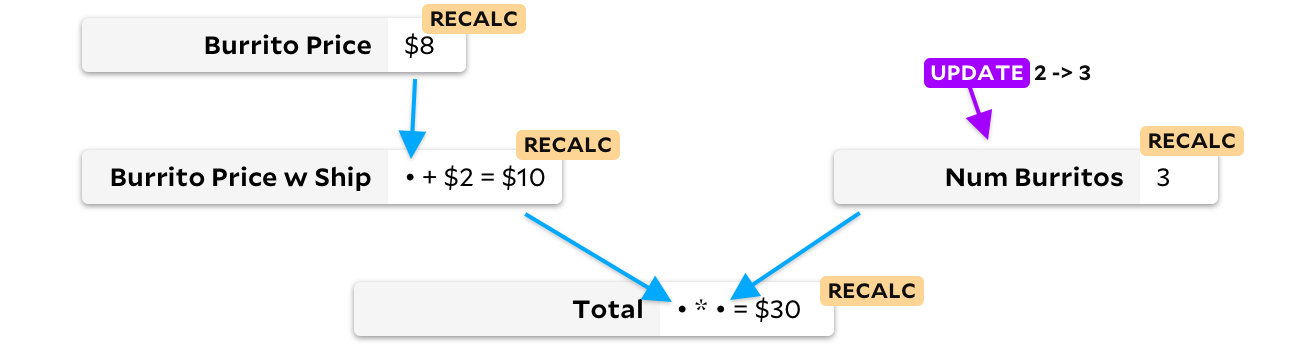

Oh no, I completely forgot! One of my friends loves to wolf down multiple burritos at a time, so I actually want to place an order for three burritos. If I update Num Burritos, a naïve spreadsheet engine might recompute the entire document, recalculating first the cells with no inputs, and then recalculating any cell whose inputs are ready until we've finished every cell. In this case, we'd first calculate Burrito Price and Num Burritos, then Burrito Price w Ship, and then a new final Total of $30.

This simple strategy of recalculating the whole document may sound wasteful, but it's actually already better than VisiCalc, the first spreadsheet software ever made, and the first so-called "killer app", responsible for popularizing the Apple II. VisiCalc would repeatedly recalculate cells from left-to-right and top-to-bottom, sweeping over them again and again until none of them changed. Despite this "interesting" algorithm, VisiCalc remained the dominant spreadsheet software for four years. Its reign ended in 1983, when Lotus 1-2-3 swept the market with "natural-order recalculation", as described by Tracy Robnett Licklider in Byte Magazine:

Lotus 1-2-3 exploited natural-order recalculation, although it also supported VisiCalc’s row- and column-order modes. Natural-order recalculation maintained a cell dependency list and recalculated a cell before recalculating cells that depended on it.

Lotus 1-2-3 implemented the "recalculate everything" strategy we've shown above, and for the first decade of spreadsheets, that was as good as it got. Yes, we recalculate every cell in the document, but at least we only recalculate every cell once.

but what about "burrito price w ship"

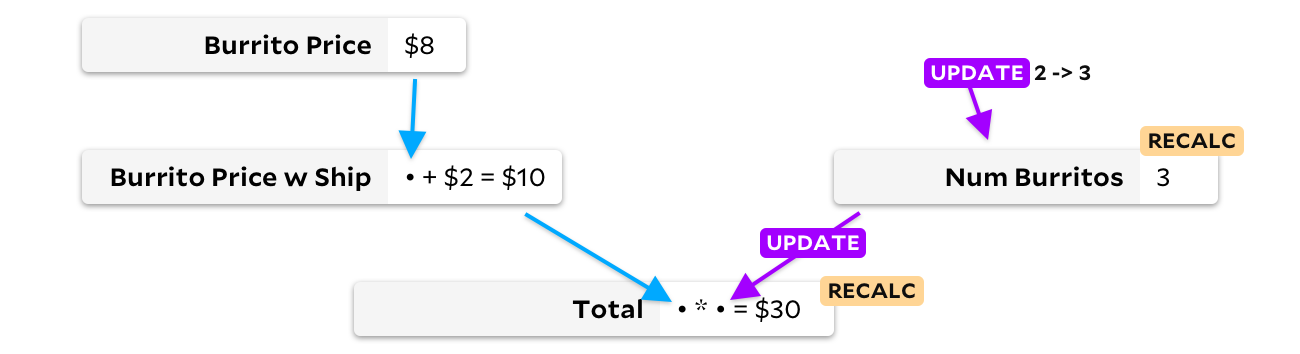

Great point, header 2. In my three burrito example there's no reason to recompute Burrito Price w Ship, because changing the number of burritos we order can't possibly influence the per-burrito price. In 1989, one of Lotus' competitors realized this, and created SuperCalc5, presumably naming it after the theory of super burritos at the core of this algorithm. SuperCalc5 recalculated "only cells dependent on changed cells", which would make updating the burrito count look more like this:

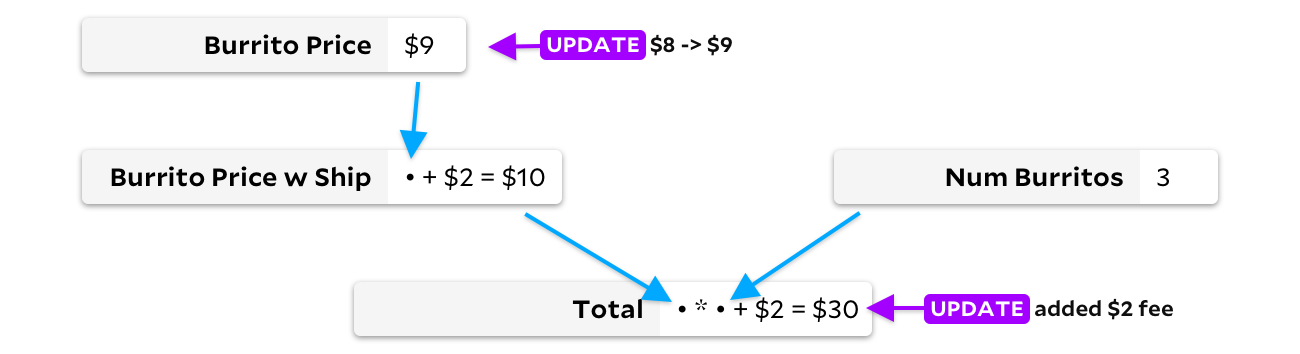

By only updating a cell when one of its inputs changes, we can avoid recalculating Burrito Price w Ship. In this case, it saves just a single addition, but on larger spreadsheets it can save quite a bit of time! Unfortunately, we now have another problem. Let's say my friends now want meat burritos, which cost a dollar more, and simultaneously El Farolito adds a $2 fee paid per-order, regardless of how many burritos you order. Before any formula outputs are recalculated, our graph might look like this:

Since there are two updated cells here, we have a problem. Should we recalculate Burrito Price first, or Total? Ideally, we first calculate Burrito Price, notice that its output has changed, then recalculate Burrito Price w Ship, and finally recalculate Total. However, if we instead recalculate Total first, we'll have to recalculate it a second time once the new $9 burrito price propagates down. If we don't calculate cells in the right order, this algorithm isn't better than recalculating the whole document. In some cases, it's as slow as VisiCalc!

Clearly, it's important for us to figure out the right order to update our cells. Broadly, there are two solutions to this problem: dirty marking and topological sorting.

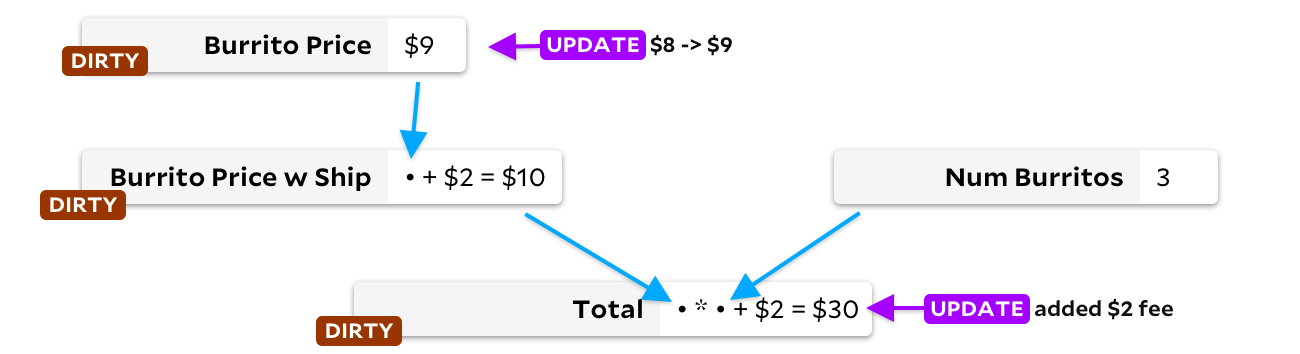

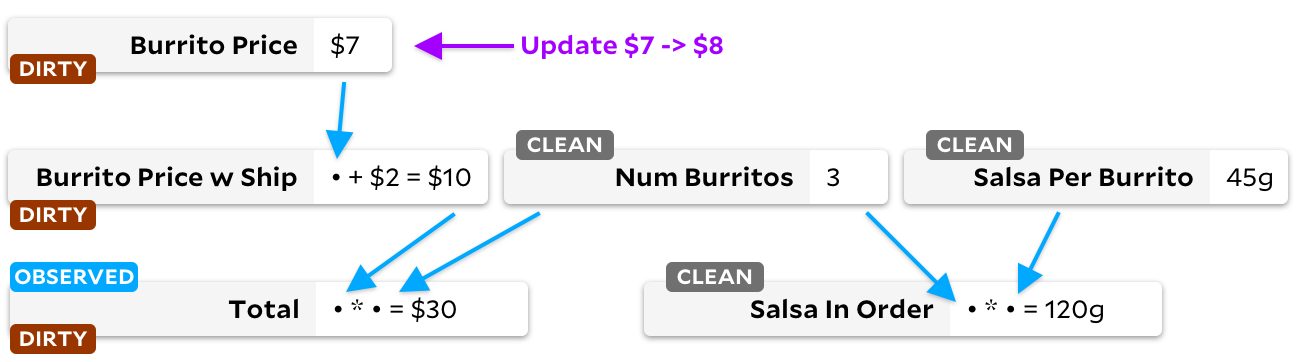

This first solution involves marking all cells downstream from an edit as dirty. For instance, when we update Burrito Price, we would mark the downstream cells Burrito Price w Ship and Total as dirty, even before doing any recalculations:

Then, in a loop, we find a dirty cell that has no dirty inputs, and recalculate it. When there are no dirty cells left, we're done! This solves our ordering problem. There's one downside though — if a cell is recalculated and we find its new output to be the same as its previous output, we'll still recalculate downstream cells! A little bit of extra logic can avoid actually running the formula trouble in this case, but we unfortunately still waste time marking and unmarking a lot of cells as dirty.

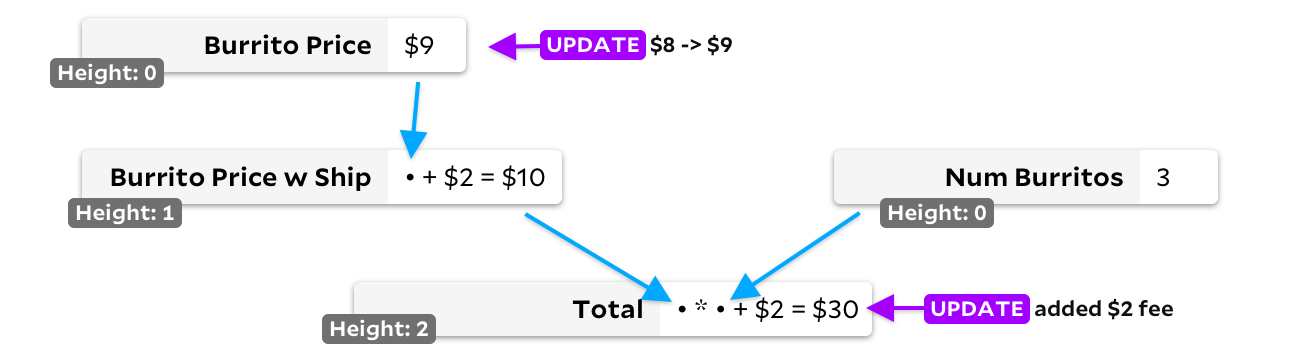

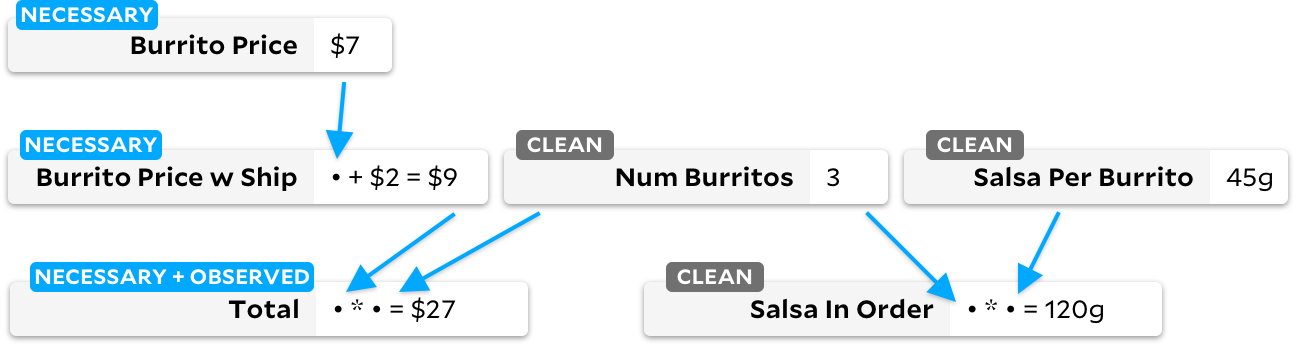

The second solution is topological sorting. If a cell has no inputs, we mark its height as 0. If a cell has inputs, we mark its height as the maximum of the heights of its inputs, plus one. This guarantees all cells have a greater height than any of their inputs, so we just keep track of all cells with a changed input, always choosing the cell with the lowest height to recalculate first:

In our double-update example, Burrito Price and Total would be initially added to the recalculation heap. Burrito Price has lesser height, and would be recalculated first. Since its output changes, we then would add Burrito Price w Ship to the recalculation heap, and since it too has less height than Total, it would be recalculated before we finally recalculate Total.

This has a big advantage over the first solution: no cell is ever marked dirty unless one of its inputs actually change. However, it requires we keep all cells pending recalculation in sorted order. If we use a heap, this results in an O(n log n) slowdown, so in the worst case, asymptotically slower than Lotus 1-2-3's strategy of recalculating everything.

Modern-day Excel uses a combination of dirty marking and topological sorting, which you can read more about in their docs.

demand-driven complications

We've now more or less reached the algorithms used in modern-day spreadsheet recalculation. Unfortunately, I suspect there is basically no business case to be made for ever improving it further. The few people with the problem "my Excel spreadsheet is too slow" have already written enough Excel formulas that migration to any other platform is impossible. Fortunately, I have no understanding of business, and so we're going to look at further improvements anyway.

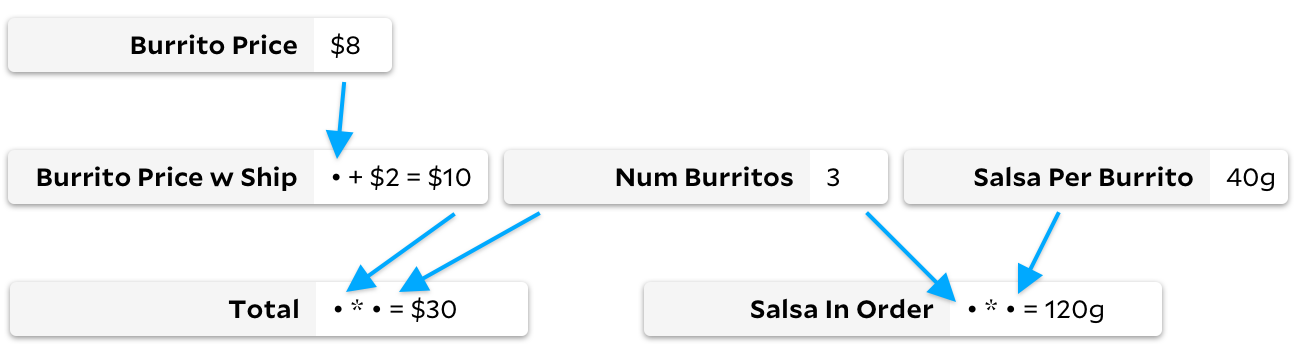

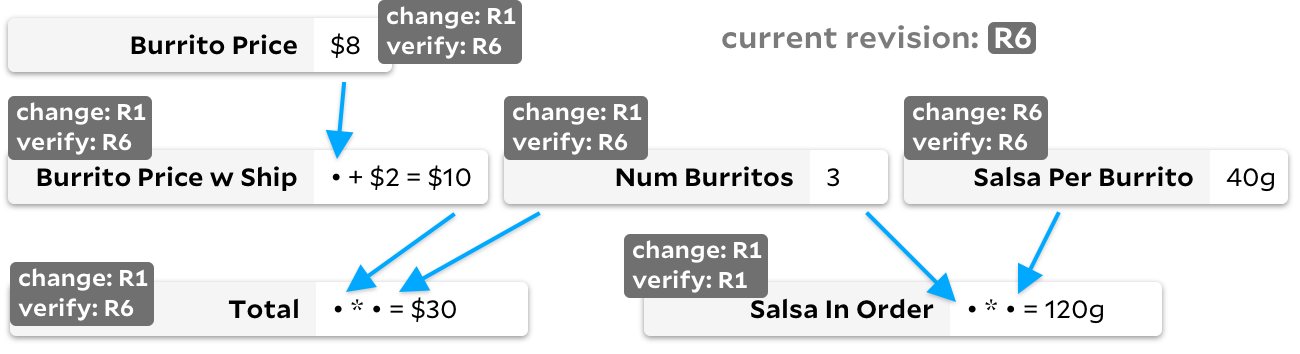

Beyond caching, one of the cool aspects of a spreadsheet-style computation graph is we can only calculate the cells that we're interested in. This is sometimes called lazy computation, or demand-driven computation. As a more concrete example, here's a slightly expanded burrito spreadsheet graph. This example is the same as before, but we've added what is best described as "salsa calculations". Each burrito contains 40 grams of salsa, and we perform a quick multiplication to know how much salsa is in our entire order. In this case, since our order has three burritos, there's a total of 120 grams of salsa in our entire order.

Of course, astute readers will have spotted the problem here already: knowing the total weight of salsa in an order is a pretty useless measurement. Who cares that it's 120 grams? What am I supposed to do with this information?? Unfortunately, a regular spreadsheet would waste cycles calculating Salsa In Order, even if we don't want it recalculated most of the time.

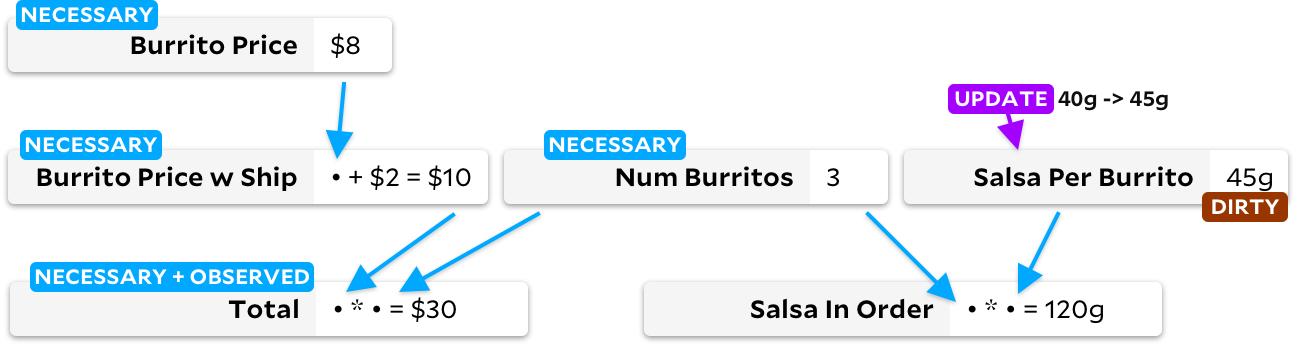

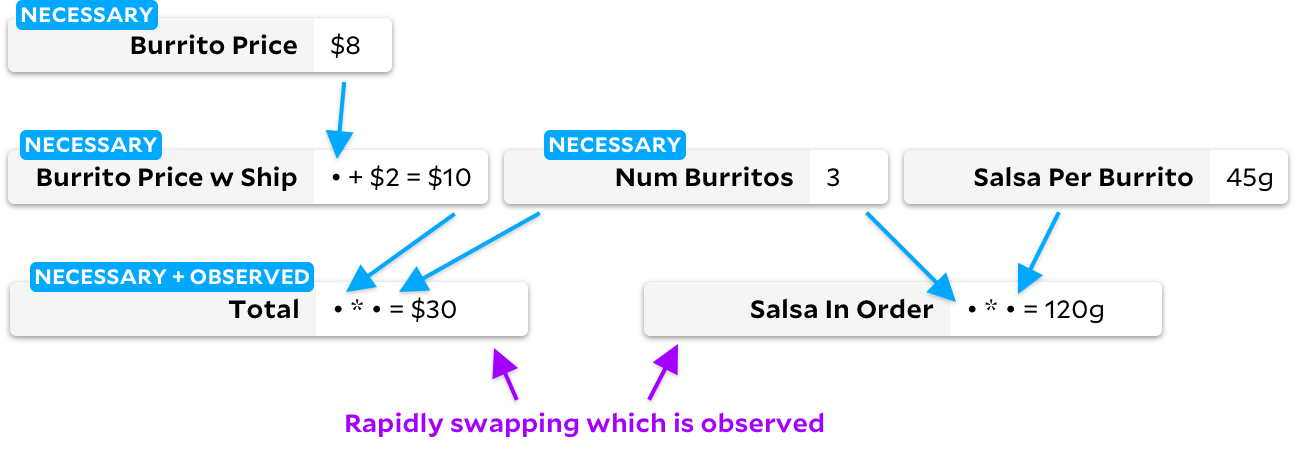

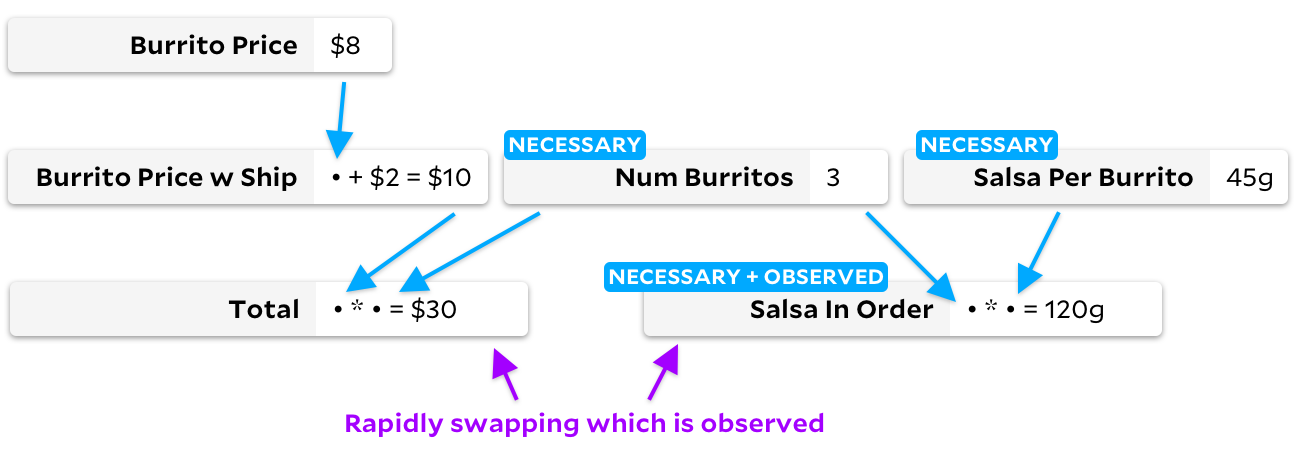

This is where demand-driven recalculation can help. If we could somehow specify that we're only interested in the output of Total, we could only recompute that cell and its dependencies, and skip touching Salsa In Order and Salsa Per Burrito. Let's call Total an observed cell, since we're trying to look at its output. We can also call both Total and its three dependencies necessary cells, since they're necessary to compute some observed cell. Salsa In Order and Salsa Per Burrito would be aptly described as unnecessary.

Some folks on the Rust team created the Salsa framework to solve this problem, clearly naming it after the unnecessary salsa calculations their computers were wasting cycles on. Salsa is really cool, and I'm sure they can explain how it works better than I can. Very roughly, they use revision numbers to track whether a cell needs recalculation. Any mutation to a formula or input increments the global revision number, and every cell tracks two revisions: verified_at to track the revision its output was last brought up-to-date, and changed_at to track the revision its output last actually changed.

When the user indicates they'd like a fresh value for Total, we'd first recursively recalculate any cell necessary to Total, skipping cells if their last_updated revision is equal to the global revision. Once the dependencies of Total are up-to-date, we only rerun the actual formula in Total if either Burrito Price w Ship or Num Burrito have a changed_at revision greater than the verified_at revision of Total. This is great for Salsa's purposes in the rust-analyzer, where simplicity is important and each cell takes a significant amount of time to compute. However, we can see the disadvantages in our burrito graph above — if Salsa Per Burrito constantly changes, our global revision number will frequently tick up. This will make each observation of Total walk the three cells necessary to it, even though none of those cells have actually changed. No formulas will be recalculated, but if the graph is large, repeatedly walking all of a cell's dependencies could get expensive.

faster demand-driven solutions

Instead of inventing new algorithms for demand-driven spreadsheets, what if we instead draw from the two classical spreadsheet algorithms mentioned earlier: dirty marking and topological sorting? As you might imagine, a demand-driven model complicates both of these, but both are still viable.

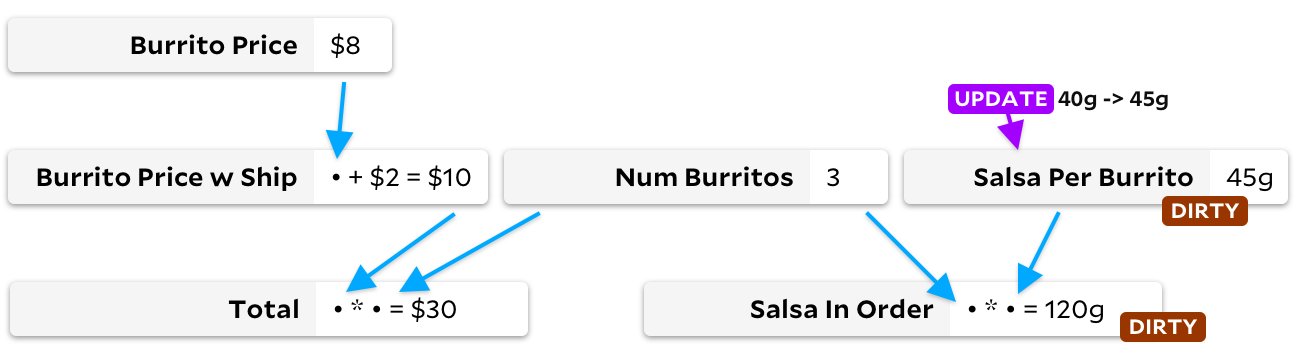

Let's first look at dirty marking. As before, when we change a cell's formula, we mark all downstream cells as dirty. So if we update Salsa Per Burrito, it would look something like this:

However, instead of immediately recomputing all cells that are dirty, we wait for the user to observe a cell. Then we run Salsa's algorithm on the observed cell, but instead of reverifying dependencies with outdated verified_at revision numbers, we instead only reverify cells marked as dirty. This is the technique used by Adapton. You'll note that when we observe Total, we'll find it isn't dirty, and so we can skip the graph walk that Salsa would have performed!

If we instead decide to observe Salsa In Order, we'll find it is marked as dirty, and so we'll reverify and recompute both Salsa Per Burrito and Salsa In Order. Even here, there are benefits over using just revision numbers, since we'll be able to skip a recursive walk on the still-clean Num Burritos cell.

Demand-driven dirty marking performs fantastically when the set of cells we're trying to observe changes frequently. Unfortunately, this has the same downsides as the dirty marking algorithm from before. If a cell with many downstream cells changes, we may waste a lot of time marking and unmarking cells as dirty, even if their inputs won't actually have changed when we go to recalculate them. In the worst case, each changes causes us to mark the entire graph as dirty, which would give us the same ~order of magnitude performance as Salsa's algorithm.

Moving on from dirty marking, we can also adapt topological sorting for demand-driven computations. This is the technique used by Jane Street's Incremental library, and requires major tricks to get right. Before we added demand-driven computations, our topological sorting algorithm used a heap to determine which cell would be recomputed next. But now, we only want to recompute cells that are necessary. How? We don't want to walk the entire tree from our observed cells like Adapton, since a complete tree walk defeats the entire purpose of topological sorting, and would give us performance characteristics similar to Adapton.

Instead, Incremental maintains a set of cells that the user has marked observed, as well as the set of cells that are necessary to any observed cell. Whenever a cell is marked observed or unobserved, Incremental walks that cell's dependencies to make sure the necessary marks are applied correctly. Then, we only add cells to the recalculation heap if they're marked necessary. In our burrito graph, if only Total is part of the observed set, changing Salsa in Order would not result in any walking of the graph, since only necessary cells are recomputed:

This solves our problem without eagerly walking the graph to mark cells as dirty! We still have to remember that Salsa per Burrito is dirty, since if it later becomes necessary, we will need to recompute it. But unlike Adapton's algorithm, we don't need to push this single dirty mark down the entire graph.

anchors, a hybrid solution

Both Adapton and Incremental walk the graph, even when not recomputing cells. Incremental walks the graph upstream when the set of observed cells changes, and Adapton walks the graph downstream when a formula changes. With these small graphs, it may not be immediately apparent that this graph walking is expensive. However, if your graph is large and your cells are relatively cheap to compute — often the case with spreadsheets — you'll find that most of your costs come from wasted graph walking! When cells are cheap, marking a bit on a cell can cost roughly the same as just recomputing the cell from scratch. So ideally, if we want our algorithm to be substantially faster than computing from scratch, we've got to avoid unnecessarily walking the graph as best we can.

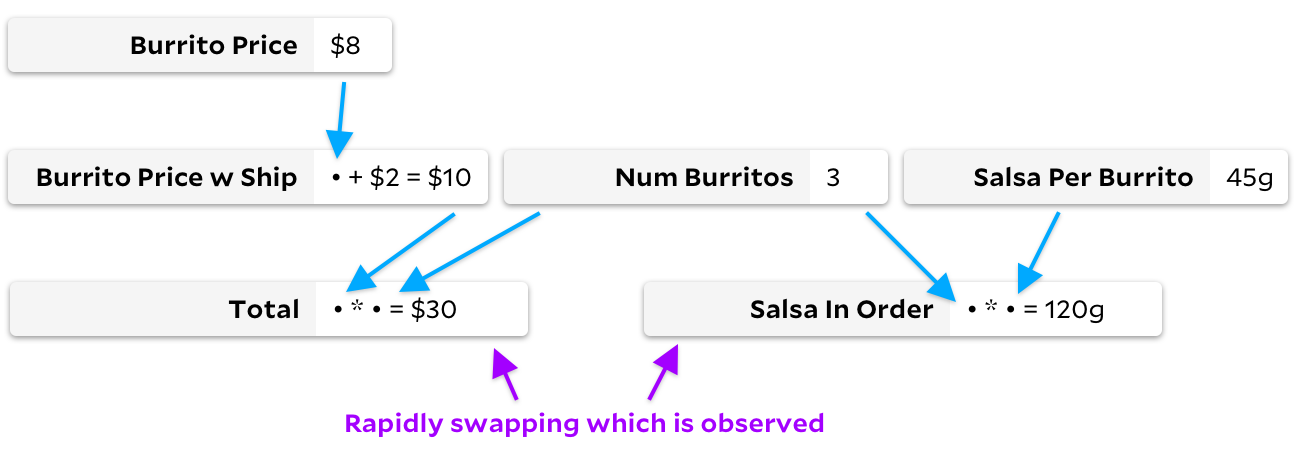

The more I thought about the problem, the more I realized that they waste time walking the graph in roughly opposite situations. In our burrito graph, let's imagine cell formulas rarely change, but we rapidly switch between first observing Total, and then observing Salsa in Order.

In this case, Adapton will never walk the tree. No inputs are changing, and so we never need to mark anything as dirty. Since nothing is dirty, each observation is cheap as well, since we can simply return the cached value from a clean cell immediately. However, Incremental performs poorly in this example. Even though no values are ever recomputed, Incremental will repeatedly mark and unmark many cells as necessary and unnecessary.

In the opposite case, let's imagine a graph where our formulas are rapidly changing, but we don't change which cells we're observing. For instance, we could imagine we're observing Total while rapidly changing Burrito Price from 4+4 to 2*4.

Just like the previous example, we aren't really recomputing many cells. 4+4 and 2*4 both equal 8, so ideally we only recompute that one bit of arithmetic when the user makes this change. However, unlike the previous example, Incremental is now the library that avoids tree walking. With Incremental, we've cached the fact that Burrito Price is a necessary cell, and so when it changes, we can recalculate it without walking the graph. With Adapton, we waste time marking Burrito Price w Ship and Total as dirty, even though the output of Burrito Price won't have changed.

Given each algorithm performs well in the other's degenerate cases, wouldn't it be ideal if we could just detect those degenerate cases, and switch to the faster algorithm? This is what I have attempted to do with my own library, Anchors. Anchors runs both algorithms simultaneously on the same graph! If this sounds wild and unnecessary and overly complicated, that's probably because it is.

In many cases, Anchors follows Incremental's algorithm exactly, which avoids Adapton's degenerate case above. But when cells are marked as unobserved, its behavior diverges slightly. Let's look at what happens. We start with marking Total as observed:

If we then mark Total as unobserved and Salsa in Order as observed, the traditional Incremental algorithm would alter the graph to look like this, walking through every cell in the process:

Anchors also walks every cell for this change, but instead produces a graph that looks like this:

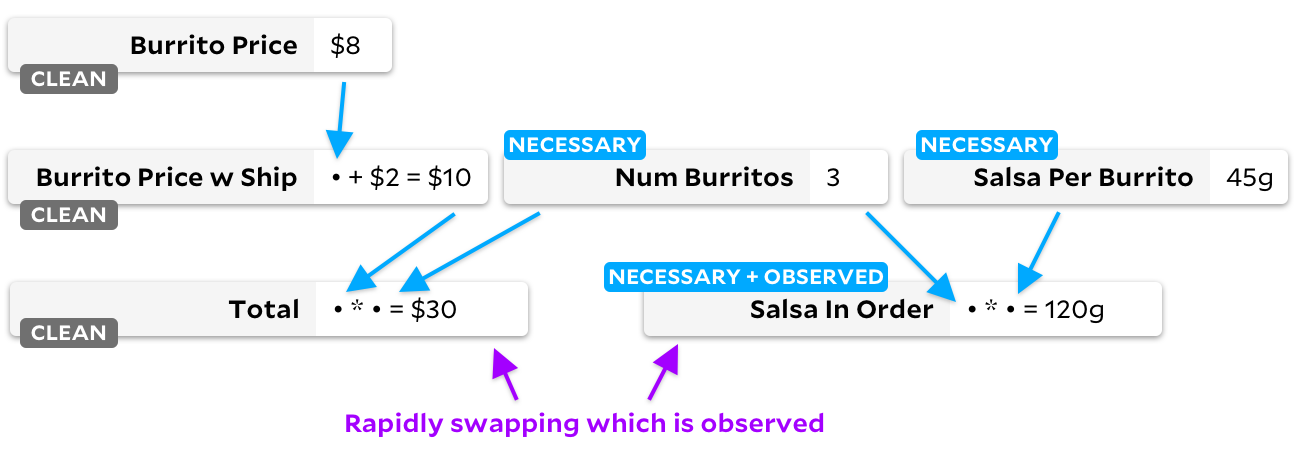

Note the "clean" flags! When a cell is no longer necessary, we mark it as "clean". Let's look at what happens when we switch from observing Salsa in Order to Total:

That's right — our graph now has no necessary cells. If a cell has a "clean" flag, we never mark it as observed. At this point, no matter what cell we mark as observed or unobserved, Anchors will never waste time walking the graph — it knows that none of the inputs have changed.

Looks like there's a discount at El Farolito! Let's drop Burrito Price by a dollar. How does Anchors know Total needs to be recomputed? Before we recompute any formulas, let's look at how Anchors will see the graph right after we change just Burrito Price, before recomputing anything:

If a cell has a clean flag, we run the traditional Adapton algorithm on it, eagerly marking downstream cells as dirty. When we later run the Incremental algorithm, we can quickly tell that there is an observed cell marked as dirty, and know we need to recompute its dependencies. After that's finished, the final graph will look like this:

We only recompute cells if they're necessary, so whenever we recompute a dirty cell, we also mark it as necessary. At a high level, you can imagine these three cell states form a looping state machine:

On necessary cells, we run the Incremental's topological sorting algorithm. On unnecessary cells, we run the Adapton algorithm.

burrito syntax

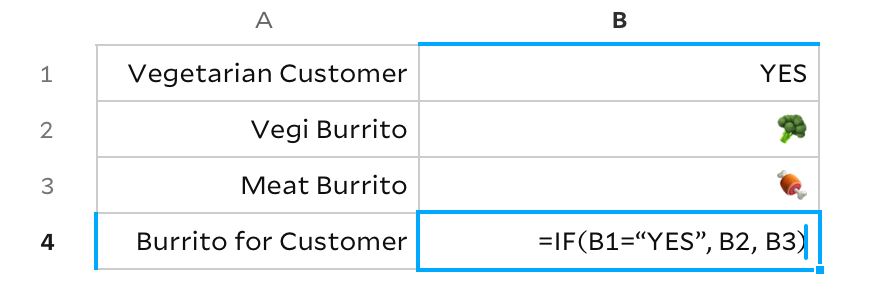

I'd like to finish up by answering a final question: so far, we're been discussing the many problems the demand-driven model causes for our recomputation strategy. But the problems aren't just for the algorithm: there are syntactic problems to solve too. For instance, let's make a spreadsheet to select a burrito emoji for our customer. We'd write an IF statement in the spreadsheet like this:

Because traditional spreadsheets aren't demand-driven, we can compute B1, B2, and B3 in any order, so long as we compute our IF cell after all three inputs are ready. In a demand-driven world however, if we can calculate the value of B1 first, we can peek at the value to know which of B2 or B3 we need to recalculate. Unfortunately, a traditional spreadsheet's IF has no way to express this!

The problem: B2 simultaneously references cell B2 and retrieves its value, 🥦. Most demand-driven libraries mentioned in this post instead explicitly distinguish between a reference to a cell and the act of retrieving its actual value. In Anchors, we call this cell reference an Anchor. Much like a burrito in real life wraps a bunch of ingredients together, the Anchor<T> type wraps T — which I suppose makes our vegi burrito cell Anchor<Burrito>, a sort of ridiculous burrito of burritos. Functions can pass around our Anchor<Burrito> as much as they'd like — it's only when they actually unwrap the burrito to access the Burrito inside that we create a dependency edge in our graph, indicating to the recomputation algorithm that the cell may be necessary and need recomputing.

The approach taken by Salsa and Adapton is to use function calls and normal control flow as a way to unwrap these values. For instance, in Adapton, we might write our "Burrito for Customer" cell something like this:

let burrito_for_customer = cell!({

if get!(is_vegetarian) {

get!(vegi_burrito)

} else {

get!(meat_burrito)

}

});

By distinguishing between a cell reference (vegi_burrito here) and the act of unwrapping its value (get!), Adapton can piggy-back on top of Rust's control flow operators like if. This is a great solution! However, a little bit of magical global state is needed to correctly connect the get! calls to the cell! that needs recomputing when is_vegetarian changes. The approach I've taken with Anchors, inspired by Incremental, is a slightly less magical system. Similar to a pre-async/await Future, Anchors allows you to use operations like map and then on an Anchor<T>. For instance, the example above would look something like this:

let burrito_for_customer: Anchor<Burrito> = is_vegetarian.then(|is_vegetarian: bool| -> Anchor<Burrito> {

if is_vegetarian {

vegi_burrito

} else {

meat_burrito

}

});

further reading

In this already long and winding blog post, there is so much I didn't have space to talk about. Hopefully these resources can shed a little bit more light on this very interesting problem.

- Seven Implementations of Incremental, a great in-depth look at Incremental internals, as well as a bunch of optimizations like always-increasing heights I didn't have time to talk about, as well as clever ways to handle cells that change dependencies. Also worth reading from Ron Minsky: Breaking down FRP.

- Salsa's explanation videos explains how it works quite well.

- This Salsa issue, where you can watch Matthew Hammer, creator of Adapton, patiently explain cutoffs to some rando (me) who repeatedly fails to understand how they work.

- Raph Levien's Rustlab keynote has some really interesting stuff about this topic in the context of GUIs. In response to this post, he says How Autotracking Works and Autotracking: Elegant DX Via Cutting-Edge CS are great resources.

- I have to admit I don't actually understand Differential Dataflow, but it's the backbone of the Materialize database. Query planning in general seems like maybe a solution to the big cache missing problems that many of these frameworks suffer from? It doesn't have built-in demand-driven computation, but Jamie Brandon (thanks!) kindly pointed me to their blog post Lateral Joins and Demand-Driven Queries which explains how you can add demand as an input. Also of relevance is the Noira project from MIT.

- Adapton's documentation is very comprehensive.

- Turns out this way of thinking also applies to build systems.

- Slightly Incremental Ray Tracing is a slightly incremental ray tracer written in Ocaml.

- Just saw Partial State in Dataflow-Based Materialized Views today and it seems relevant.

- Gustav Westling (thanks!) pointed out Build Systems à la Carte is one of the first papers about the similarities between build systems and spreadsheets.

- Skip and Imp also look really interesting.

And of course, you can always check out the framework I built, Anchors.

Thanks to Syd Botz, Adam Perry, and Peter Stefek for feedback and discussion!

...or for a change of pace, perhaps you'd enjoy my other blog Agoraphobia →